GitLab Duo Chat

GitLab Duo Chat aims to assist users with AI in ideation and creation tasks as well as in learning tasks across the entire Software Development Lifecycle (SDLC) to make them faster and more efficient.

Chat is a part of the GitLab Duo offering.

Chat answers questions and performs tasks with the help of prompts and tools.

To answer a question asked in the Chat interface, GitLab sends a GraphQL request to the Rails backend. The Rails backend then sends instructions to the Large Language Model (LLM) through the AI Gateway.

Which use cases lend themselves most to contributing to Chat?

We aim to use Chat for all use cases and workflows that can benefit from a conversational interaction between a user and an AI driven by a large language model (LLM). Typically, these are:

- Creation and ideation tasks, as well as learning tasks, that are solved more effectively and more efficiently through iteration than through a one-shot interaction.

- Tasks that are typically satisfiable with one-shot interactions but that might need refinement or could turn into a conversation.

- Among the latter are tasks where the AI may not get it right the first time but where users can easily course correct by telling the AI more precisely what they need. For instance, "Explain this code" is a common question that most of the time would result in a satisfying answer, but sometimes the user may have additional questions.

- Tasks that benefit from the history of a conversation, so that neither the user nor the AI has to repeat information.

Chat aims to be context aware and ultimately to have access to all the GitLab resources that the user has access to. Initially, this context was limited to the content of individual issues and epics, as well as GitLab documentation. Since then, additional contexts have been added, such as code selection and code files. Work is currently underway to add vulnerability context and pipeline job context, so that users can ask questions about these contexts.

To scale the context awareness, and hence to scale creation, ideation, and learning use cases across the entire DevSecOps domain, the Duo Chat team welcomes contributions to the chat platform from other GitLab teams and the wider community. These teams are the experts in the use cases and workflows that Chat should accelerate.

Which use cases are better implemented as stand-alone AI features or at least also as stand-alone AI features?

- Narrowly scoped tasks that can be accelerated by deeply integrating AI into an existing workflow.

- Tasks that can't benefit from conversations with AI.

To make this more tangible, here is an example.

Generating a commit message based on the changes is best integrated into the commit message writing workflow.

- Without AI, commit message writing may take ten seconds.

- When an AI-generated commit message is auto-populated in the Commit message field in the IDE, the task takes about one second.

Using Chat for commit message writing would probably take longer than writing the message oneself. The user would have to switch to the Chat window, type the request and then copy the result into the commit message field.

That said, this does not mean that Chat can't write commit messages, nor that it would be prevented from doing so. If Chat has the commit context (which may be added at some point for reasons other than commit message writing), the user can ask it to do anything with this commit content, including writing a commit message. But users are unlikely to do that with Chat, because they would only lose time. Note: the resulting commit messages may differ when created from Chat with a user-written prompt versus a static prompt behind a purpose-built commit message feature.

Set up GitLab Duo Chat

To set up Duo Chat locally, go through the general setup instructions for AI features.

Working with GitLab Duo Chat

Prompts are the most vital part of the GitLab Duo Chat system. Prompts are the instructions sent to the LLM to perform certain tasks.

The state of the prompts is the result of weeks of iteration. If you want to change any prompt in the current tool, you must put it behind a feature flag.

If you have any new or updated prompts, ask members of the Duo Chat team or the AI Framework team to review them, because they have significant experience with prompts.

Troubleshooting

When working with Chat locally, you might run into an error. The most common problems are documented in this section. If you find an undocumented issue, document it in this section after you find a solution.

| Problem | Solution |
|---|---|
| There is no Chat button in the GitLab UI. | Make sure your user is part of a group with experiment and beta features enabled. |
| Chat replies with a "Forbidden by auth provider" error. | The backend can't access LLMs. Make sure your AI Gateway is set up correctly. |
| Requests take too long to appear in the UI. | Consider restarting Sidekiq by running `gdk restart rails-background-jobs`. If that doesn't work, try `gdk kill` and then `gdk start`. Alternatively, you can bypass Sidekiq entirely: temporarily replace `Llm::CompletionWorker.perform_async` statements with `Llm::CompletionWorker.perform_inline`. |
| There is no Chat button in the GitLab UI when GDK is running in non-SaaS mode. | You do not have a cloud connector access token record or an assigned seat. To create a cloud connector access record, run the following code in the Rails console: `CloudConnector::Access.new(data: { available_services: [{ name: "duo_chat", serviceStartTime: ":date_in_the_future" }] }).save` |

Contributing to GitLab Duo Chat

From the code perspective, Chat is implemented in a similar fashion to other AI features. Read more about the GitLab AI Abstraction layer.

The Chat feature uses a zero-shot agent that includes a system prompt explaining how the large language model should interpret the question and provide an answer. The system prompt defines available tools that can be used to gather information to answer the user's question.

The zero-shot agent receives the user's question and decides which tools to use to gather information to answer it. It then makes a request to the large language model, which decides if it can answer directly or if it needs to use one of the defined tools.

The tools each have their own prompt that provides instructions to the large language model on how to use that tool to gather information. The tools are designed to be self-sufficient and avoid multiple requests back and forth to the large language model.

After the tools have gathered the required information, it is returned to the zero-shot agent, which asks the large language model if enough information has been gathered to provide the final answer to the user's question.
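The loop described above can be sketched in plain Ruby. This is a hypothetical, simplified illustration and not GitLab's `ZeroShot::Executor`. The LLM is any callable that returns a Hash. The `Tool` struct, the response keys (`final`, `answer`, `tool`, `input`) and the fallback message are assumptions. The iteration cap of 10 follows the prompt-construction walkthrough later on this page.

```ruby
# Hypothetical sketch of a zero-shot agent loop; not GitLab's implementation.
# The llm callable is assumed to return either
#   { final: true, answer: "..." } or { final: false, tool: "name", input: "..." }
MAX_ITERATIONS = 10

Tool = Struct.new(:name, :description, :executor, keyword_init: true)

def run_zero_shot_agent(question, tools:, llm:)
  scratchpad = []

  MAX_ITERATIONS.times do
    # Ask the LLM whether it can answer directly or needs a tool first.
    decision = llm.call(question: question, tools: tools.map(&:name), scratchpad: scratchpad)
    return decision[:answer] if decision[:final]

    # Run the picked tool. Tools are self-sufficient and return an observation.
    tool = tools.find { |t| t.name == decision[:tool] }
    observation = tool ? tool.executor.call(decision[:input]) : "Unknown tool: #{decision[:tool]}"

    # Feed the observation back so the next LLM call has more context.
    scratchpad << { tool: decision[:tool], observation: observation }
  end

  "No final answer after #{MAX_ITERATIONS} iterations."
end
```

The iteration cap stops a model that never reaches a final answer from looping indefinitely.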

Customizing interaction with GitLab Duo Chat

You can customize user interaction with GitLab Duo Chat in several ways.

Programmatically open GitLab Duo Chat

To provide users with a more dynamic way to access GitLab Duo Chat, you can integrate functionality directly into their applications to open the GitLab Duo Chat interface. The following example shows how to open the GitLab Duo Chat drawer by using an event listener and the GitLab Duo Chat global state:

```javascript
import { helpCenterState } from '~/super_sidebar/constants';

// myFancyToggleToOpenChat is your own toggle element.
myFancyToggleToOpenChat.addEventListener('click', () => {
  helpCenterState.showTanukiBotChatDrawer = true;
});
```

Initiating GitLab Duo Chat with a pre-defined prompt

In some scenarios, you may want to direct users towards a specific topic or query when they open GitLab Duo Chat. The following example method:

- Opens the GitLab Duo Chat drawer.

- Sends a pre-defined prompt to GitLab Duo Chat.

```javascript
import chatMutation from 'ee/ai/graphql/chat.mutation.graphql';
import { helpCenterState } from '~/super_sidebar/constants';

// [...]

methods: {
  openChatWithPrompt() {
    const myPrompt = "What is the meaning of life?";

    // Open the GitLab Duo Chat drawer.
    helpCenterState.showTanukiBotChatDrawer = true;

    // Send the pre-defined prompt to GitLab Duo Chat.
    this.$apollo
      .mutate({
        mutation: chatMutation,
        variables: {
          question: myPrompt,
          resourceId: this.resourceId,
        },
      })
      .catch((error) => {
        // handle potential errors here
      });
  },
},
```

This enhancement allows for a more tailored user experience by guiding the conversation in GitLab Duo Chat towards predefined areas of interest or concern.

Adding a new tool

To add a new tool:

- Create files for the tool in the `ee/lib/gitlab/llm/chain/tools/` folder. Use existing tools like `issue_identifier` or `resource_reader` as a template.
- Write a class for the tool that includes:
  - The name and a description of what the tool does.
  - Example questions that would use this tool.
  - Instructions for the large language model on how to use the tool to gather information, that is, the main prompts this tool uses.
- Test and iterate on the prompt using RSpec tests that make real requests to the large language model.
- Implement code in the tool to parse the response from the large language model and return it to the zero-shot agent.
- Add the new tool name to the `tools` array in `ee/lib/gitlab/llm/completions/chat.rb` so the zero-shot agent knows about it.
- Add tests by adding questions that the new tool should answer to the test suite. Iterate on the prompts as needed.

The key things to keep in mind are properly instructing the large language model through prompts and tool descriptions, keeping tools self-sufficient, and returning responses to the zero-shot agent. With some trial and error on prompts, adding new tools can expand the capabilities of the Chat feature.
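The following standalone Ruby sketch shows the pieces a tool needs: a name, a description, an example, a prompt, and response parsing. `JobLogReader`, its prompt wording and the JSON response format are hypothetical. Real tools in `ee/lib/gitlab/llm/chain/tools/` build on GitLab's own base classes, which this sketch does not reproduce.

```ruby
require "json"

# Hypothetical standalone tool for illustration only.
class JobLogReader
  NAME = "JobLogReader"
  DESCRIPTION = "Useful for questions about a CI/CD job, such as why it failed."
  EXAMPLE = "Question: Why did job 123 fail? Picked tool: JobLogReader"
  PROMPT_TEMPLATE = <<~PROMPT
    You are given the log of a CI/CD job.
    Answer the question using only the log.
    Respond with JSON: {"answer": "<answer>"}
    Question: %<question>s
    Log: %<log>s
  PROMPT

  # llm: any object responding to #call(prompt) and returning a String.
  def initialize(llm:)
    @llm = llm
  end

  def execute(question:, log:)
    prompt = format(PROMPT_TEMPLATE, question: question, log: log)
    raw = @llm.call(prompt)
    # Parse the model output so the agent receives a plain answer string.
    JSON.parse(raw).fetch("answer")
  rescue JSON::ParserError, KeyError, TypeError
    "#{NAME} could not parse the model response."
  end
end
```

The sketch asks the model for a small structured response, which keeps the parsing step simple. Because the tool returns a plain string, the agent does not need to know anything about the tool's internals.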

Short videos covering this topic are available.

Debugging

To gather more insights about the full request, use `Gitlab::Llm::Logger` to write debug logs.

The default logging level on production is `INFO`. Do not use it to log any data that could contain personally identifiable information.

To follow the debugging messages related to the AI requests on the abstraction layer, you can use:

```shell
export LLM_DEBUG=1
gdk start
tail -f log/llm.log
```

Tracing with LangSmith

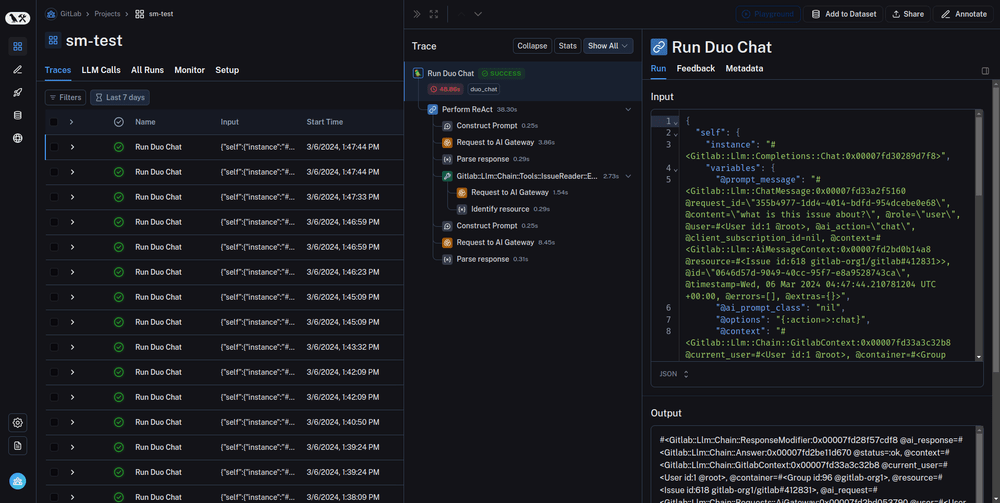

Tracing is a powerful tool for understanding the behavior of your LLM application. LangSmith has best-in-class tracing capabilities, and it's integrated with GitLab Duo Chat. Tracing can help you track down issues like:

- What's going on under the hood, if you're new to GitLab Duo Chat.

- Where exactly the process failed when you got an unexpected answer.

- Which process was the latency bottleneck.

- Which tool was used for an ambiguous question.

Tracing is especially useful for evaluations that run GitLab Duo Chat against a large dataset. The LangSmith integration works with any tool, including the Prompt Library and RSpec tests.

Use tracing with LangSmith

NOTE: Tracing is available in the Development and Testing environments only. It's not available in the Production environment.

- Go to the LangSmith site and create an account. You can also be added to the GitLab organization.
- Create an API key. Be careful where you create it: API keys can be created in your personal namespace or in the GitLab namespace.
- Set the following environment variables in GDK. You can define them in `env.runit` or `export` them directly in the terminal.

  ```shell
  export LANGCHAIN_TRACING_V2=true
  export LANGCHAIN_API_KEY='<your-api-key>'
  export LANGCHAIN_PROJECT='<your-project-name>'
  export LANGCHAIN_ENDPOINT='api.smith.langchain.com'
  export GITLAB_RAILS_RACK_TIMEOUT=180 # Extending puma timeout for using LangSmith with Prompt Library as the evaluation tool.
  ```

  The project name can be an existing LangSmith project or a new one. For a new project, setting the name in the environment variable is enough: the project is created during the first request.
- Restart GDK.
- Ask Chat any question.
- Open the project in LangSmith under Projects > [Project name]. The Runs tab should contain your latest requests.

Testing GitLab Duo Chat

Because the quality of answers to user questions in GitLab Duo Chat depends heavily on the toolchain and the prompts of each tool, even a minor change in a prompt or a tool commonly affects how some questions are processed.

To make sure that a change in the toolchain doesn't break existing functionality, you can use the following RSpec tests to validate answers to some predefined questions when using real LLMs:

- `ee/spec/lib/gitlab/llm/completions/chat_real_requests_spec.rb`: This test validates that the zero-shot agent is selecting the correct tools for a set of Chat questions. It checks tool selection but does not evaluate the quality of the Chat response.
- `ee/spec/lib/gitlab/llm/chain/agents/zero_shot/qa_evaluation_spec.rb`: This test evaluates the quality of a Chat response by passing the question asked, along with the Chat-provided answer and context, to at least two other LLMs for evaluation. This evaluation is limited to questions about issues and epics only. Learn more about the GitLab Duo Chat QA Evaluation Test.

If you are working on any changes to the GitLab Duo Chat logic, be sure to run the GitLab Duo Chat CI jobs in the merge request that contains your changes. Some of the CI jobs must be manually triggered.

Testing locally

To run the QA Evaluation test locally, the following environment variables must be set:

```shell
ANTHROPIC_API_KEY='your-key' VERTEX_AI_PROJECT='your-project-id' REAL_AI_REQUEST=1 bundle exec rspec ee/spec/lib/gitlab/llm/completions/chat_real_requests_spec.rb
```

Testing with CI

The following CI jobs for the GitLab project run the tests tagged with `real_ai_request`:

- `rspec-ee unit gitlab-duo-chat-zeroshot`: The job runs `ee/spec/lib/gitlab/llm/completions/chat_real_requests_spec.rb`. The job must be manually triggered and is allowed to fail.
- `rspec-ee unit gitlab-duo-chat-qa`: The job runs the QA evaluation tests in `ee/spec/lib/gitlab/llm/chain/agents/zero_shot/qa_evaluation_spec.rb`. The job must be manually triggered and is allowed to fail. Read about GitLab Duo Chat QA Evaluation Test.
- `rspec-ee unit gitlab-duo-chat-qa-fast`: The job runs a single QA evaluation test from `ee/spec/lib/gitlab/llm/chain/agents/zero_shot/qa_evaluation_spec.rb`. The job always runs and is not allowed to fail. Although the QA test might still fail, it is cheap and fast to run and is intended to prevent regressions in the QA test helpers.
- `rspec-ee unit gitlab-duo pg14`: This job runs tests to ensure that the GitLab Duo features work without running into system errors. The job always runs and is not allowed to fail. This job does NOT conduct evaluations. The quality of the feature is tested in other jobs, such as the QA jobs.

Management of credentials and API keys for CI jobs

All API keys required to run the RSpec tests should be masked.

The exception is GCP credentials, because they contain characters that prevent them from being masked. Because the CI jobs need to run on MR branches, GCP credentials cannot be added as a protected variable and must be added as a regular CI variable. For security, the GCP credentials and the associated project added to the GitLab project's CI must be sandboxed and must not be able to access any production infrastructure.

GitLab Duo Chat QA Evaluation Test

Evaluation of a natural language generation (NLG) system such as GitLab Duo Chat is a rapidly evolving area with many unanswered questions and ambiguities.

A practical working assumption is that LLMs can generate a reasonable answer when given a clear question and context. Under this assumption, we are exploring using LLMs as evaluators to determine the correctness of a sample of questions, to track the overall accuracy of GitLab Duo Chat's responses, and to detect regressions in the feature.

For the discussions related to the topic, see the merge request and the issue.

The current QA evaluation test consists of the following components.

Epic and issue fixtures

The fixtures are replicas of public issues and epics from projects and groups owned by GitLab.

Internal notes were excluded when the issues and epics were sampled. The fixtures have been committed into the canonical gitlab repository.

See the snippet used to create the fixtures.

RSpec and helpers

- The RSpec file and the included helpers invoke the Chat service, an internal interface, with the question.
- After the Chat service's answer is collected, the answer is injected into a prompt, also known as an "evaluation prompt". This prompt instructs an LLM to grade the correctness of the answer based on the question and a context. The context is a JSON serialization of the issue or epic being asked about in each question.
- The evaluation prompt is sent to two LLMs, Claude and Vertex.
- The evaluation responses of the LLMs are saved as JSON files.
- For each question, RSpec regex-matches for `CORRECT` or `INCORRECT`.
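The following is a minimal sketch of the regex-matching step. It assumes each evaluation response is free text that contains a verdict keyword. The method names and the `:unparsable` outcome are hypothetical. `INCORRECT` must be checked first, or matched with word boundaries, because a bare `/CORRECT/` also matches inside `INCORRECT`.

```ruby
# Hypothetical sketch: map an evaluator's free-text response to a verdict.
def grade(evaluation_text)
  # Check INCORRECT first; \b also stops CORRECT from matching inside INCORRECT.
  return :incorrect if evaluation_text.match?(/\bINCORRECT\b/)
  return :correct if evaluation_text.match?(/\bCORRECT\b/)

  :unparsable
end

# Share of correct verdicts among responses that contained a verdict.
def accuracy(grades)
  graded = grades.reject { |g| g == :unparsable }
  return 0.0 if graded.empty?

  graded.count(:correct).fdiv(graded.size)
end
```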

Collection and tracking of QA evaluation with CI/CD automation

The gitlab project's CI configurations have been set up to run the RSpec tests, collect the evaluation responses as artifacts, and execute a reporter script that automates the collection and tracking of evaluations.

When the `rspec-ee unit gitlab-duo-chat-qa` job runs in a pipeline for a merge request, the reporter script uses the evaluations saved as CI artifacts to generate a Markdown report and posts it as a note in the merge request.

To keep track of and compare QA test results over time, you must manually run the `rspec-ee unit gitlab-duo-chat-qa` job on the `master` branch:

- Visit the new pipeline page.
- Select "Run pipeline" to run a pipeline against the `master` branch.
- When the pipeline first starts, the `rspec-ee unit gitlab-duo-chat-qa` job under the "Test" stage is not available. Wait a few minutes for other CI jobs to run, and then manually start this job by selecting the "Play" icon.

When the test runs on `master`, the reporter script posts the generated report as an issue, saves the evaluation artifacts as a snippet, and updates the tracking issue GitLab-org/ai-powered/ai-framework/qa-evaluation#1 in the project GitLab-org/ai-powered/ai-framework/qa-evaluation.
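The following hypothetical Ruby sketch shows roughly how the report step could work. It turns per-question verdicts from the two evaluator LLMs into a Markdown table. The input shape (an Array of Hashes with `:question`, `:claude` and `:vertex` keys) and the agreement summary are assumptions. They are not the real artifact format or reporter output.

```ruby
# Hypothetical sketch of a Markdown report built from evaluation verdicts.
def markdown_report(results)
  lines = ["| Question | Claude | Vertex |", "|---|---|---|"]
  results.each do |r|
    # Escape pipes so a question cannot break the table layout.
    question = r[:question].to_s.gsub("|") { "\\|" }
    lines << "| #{question} | #{r[:claude]} | #{r[:vertex]} |"
  end
  agreed = results.count { |r| r[:claude] == r[:vertex] }
  lines << ""
  lines << "Evaluators agreed on #{agreed} of #{results.size} questions."
  lines.join("\n")
end
```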

GraphQL Subscription

The GraphQL Subscription for Chat behaves slightly differently because it's user-centric. A user could have Chat open in multiple browser tabs, or in their IDE.

We therefore need to broadcast messages to multiple clients to keep them in sync. The `aiAction` mutation with the `chat` action behaves as follows:

- All complete Chat messages (including messages from the user) are broadcast with the `userId`, `aiAction: "chat"` as identifier.
- Chunks from streamed Chat messages and currently used tools are broadcast with the `userId`, `resourceId`, and the `clientSubscriptionId` from the mutation as identifier.
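The following hypothetical Ruby helper makes the two broadcast scopes concrete. It returns the identifiers that a Chat event would be published under. The method and the event names (`:complete_message`, `:chunk`, `:tool_update`) are invented for illustration. Only the identifier names come from the rules above.

```ruby
# Hypothetical sketch of the broadcast rules; not GitLab's implementation.
def broadcast_identifiers(user_id:, event:, resource_id: nil, client_subscription_id: nil)
  case event
  when :complete_message
    # Keyed only by user, so every open tab or IDE session receives it.
    { userId: user_id, aiAction: "chat" }
  when :chunk, :tool_update
    # Keyed by the mutation's clientSubscriptionId, so only that client listens.
    { userId: user_id, resourceId: resource_id, clientSubscriptionId: client_subscription_id }
  else
    raise ArgumentError, "unknown event: #{event}"
  end
end
```

Complete messages are keyed only by the user, which is what keeps several clients in sync. Streamed chunks carry a per-message `clientSubscriptionId`, so they should reach only the client that sent the mutation.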

Examples of GraphQL Subscriptions in a Vue component:

- Complete Chat message

  ```javascript
  import aiResponseSubscription from 'ee/graphql_shared/subscriptions/ai_completion_response.subscription.graphql';

  // [...]

  apollo: {
    $subscribe: {
      aiCompletionResponse: {
        query: aiResponseSubscription,
        variables() {
          return {
            userId, // for example "gid://gitlab/User/1"
            aiAction: 'CHAT',
          };
        },
        result({ data }) {
          // handle data.aiCompletionResponse
        },
        error(err) {
          // handle error
        },
      },
    },
  },
  ```

- Streamed Chat message

  ```javascript
  import aiResponseSubscription from 'ee/graphql_shared/subscriptions/ai_completion_response.subscription.graphql';

  // [...]

  apollo: {
    $subscribe: {
      aiCompletionResponseStream: {
        query: aiResponseSubscription,
        variables() {
          return {
            userId, // for example "gid://gitlab/User/1"
            resourceId, // can be either a resourceId (on Issue, Epic, etc. items), or userId
            clientSubscriptionId, // randomly generated identifier for every message
            htmlResponse: false, // important to bypass HTML processing on every chunk
          };
        },
        result({ data }) {
          // handle data.aiCompletionResponse
        },
        error(err) {
          // handle error
        },
      },
    },
  },
  ```

Note that we still broadcast chat messages and currently used tools using the `userId` and `resourceId` as identifier.

However, this is deprecated and should no longer be used. We want to remove `resourceId` from the subscription as part of this issue.

Duo Chat GraphQL queries

- Visit GraphQL explorer.
- Execute the `aiAction` mutation. Here is an example:

  ```graphql
  mutation {
    aiAction(
      input: {
        chat: {
          resourceId: "gid://gitlab/User/1",
          content: "Hello"
        }
      }
    ){
      requestId
      errors
    }
  }
  ```

- Execute the following query to fetch the response:

  ```graphql
  query {
    aiMessages {
      nodes {
        requestId
        content
        role
        timestamp
        chunkId
        errors
      }
    }
  }
  ```

If you can't fetch the response, check `graphql_json.log`, `sidekiq_json.log`, `llm.log`, or `modelgateway_debug.log` for error information.
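If you prefer scripting to GraphQL explorer, you can also send the `aiAction` mutation over HTTP. The following minimal sketch uses only Ruby's standard library and only builds the request. The endpoint URL, the personal access token and the `Bearer` authorization header are assumptions about your local setup.

```ruby
require "json"
require "net/http"
require "uri"

# Hypothetical helper: builds (but does not send) a POST to the GitLab
# GraphQL API that runs the aiAction chat mutation.
def build_ai_action_request(endpoint, token:, content:, resource_id:)
  # to_json produces quoted, escaped strings that are valid GraphQL literals.
  query = <<~GRAPHQL
    mutation {
      aiAction(input: { chat: { resourceId: #{resource_id.to_json}, content: #{content.to_json} } }) {
        requestId
        errors
      }
    }
  GRAPHQL

  request = Net::HTTP::Post.new(URI(endpoint))
  request["Authorization"] = "Bearer #{token}"
  request["Content-Type"] = "application/json"
  request.body = { query: query }.to_json
  request
end

# To send it (placeholder URL for a local GDK):
# uri = URI("http://localhost:3000/api/graphql")
# request = build_ai_action_request(uri.to_s, token: "<your-token>", content: "Hello", resource_id: "gid://gitlab/User/1")
# response = Net::HTTP.start(uri.host, uri.port) { |http| http.request(request) }
```

After the mutation returns a `requestId`, fetch the answer with the `aiMessages` query in the same way.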

Testing GitLab Duo Chat in production-like environments

GitLab Duo Chat is enabled in the Staging and Staging Ref GitLab environments.

Because GitLab Duo Chat is currently only available to members of groups in the Premium and Ultimate tiers, Staging Ref may be an easier place for GitLab team members to test changes. In Staging Ref, you can make yourself an instance Admin and, as an Admin, easily create licensed groups for testing.

Product Analysis

To better understand how the feature is used, each production user input message is analyzed using an LLM and Ruby, and the analysis is tracked as a Snowplow event.

The analysis can contain any of the attributes defined in the latest iglu schema.

- All possible "category" and "detailed_category" values are listed here.
- The following is yet to be implemented:
  - "is_proper_sentence"
- The following are deprecated:
  - "number_of_questions_in_history"
  - "length_of_questions_in_history"
  - "time_since_first_question"

Dashboards can be created to visualize the collected data.

How access_duo_chat policy works

This table describes the requirements for the `access_duo_chat` policy to return `true` in different contexts.

| | GitLab.com | Dedicated or Self-managed | All instances |
|---|---|---|---|
| For user outside of project or group (`user.can?(:access_duo_chat)`) | User needs to belong to at least one group on Premium or Ultimate tier with the `duo_features_enabled` group setting switched on | Instance needs to be on Premium or Ultimate tier; instance needs to have the `duo_features_enabled` setting switched on | |
| For user in group context (`user.can?(:access_duo_chat, group)`) | User needs to belong to at least one group on Premium or Ultimate tier with the `experiment_and_beta_features` group setting switched on; root ancestor group of the group needs to be on Premium or Ultimate tier and the group must have the `duo_features_enabled` setting switched on | Instance needs to be on Premium or Ultimate tier; instance needs to have the `duo_features_enabled` setting switched on | User must have at least read permissions on the group |
| For user in project context (`user.can?(:access_duo_chat, project)`) | User needs to belong to at least one group on the Premium or Ultimate tier with the `experiment_and_beta_features` group setting enabled; project root ancestor group needs to be on Premium or Ultimate tier and the project must have the `duo_features_enabled` setting switched on | Instance needs to be on Ultimate tier; instance needs to have the `duo_features_enabled` setting switched on | User must have at least read permission on the project |
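The GitLab.com column of the table can be read as two boolean rules. The following simplified model is hypothetical and for illustration only. The `Group` struct and the method names are invented, the real checks live in GitLab's policy classes, and the Dedicated or Self-managed column is not modeled.

```ruby
# Hypothetical model of the GitLab.com column of the table above.
Group = Struct.new(:tier, :duo_features_enabled, :experiment_and_beta_features, keyword_init: true)

PAID_TIERS = %i[premium ultimate].freeze

def paid?(group)
  PAID_TIERS.include?(group.tier)
end

# Row 1: user outside of a project or group.
def can_access_duo_chat_globally?(user_groups)
  user_groups.any? { |g| paid?(g) && g.duo_features_enabled }
end

# Rows 2 and 3: user in a group or project context.
# root_ancestor: root group of the group or project.
# resource_duo_enabled: the group's or project's own duo_features_enabled setting.
def can_access_duo_chat_in_context?(user_groups, root_ancestor:, resource_duo_enabled:, can_read:)
  in_beta_group = user_groups.any? { |g| paid?(g) && g.experiment_and_beta_features }
  in_beta_group && paid?(root_ancestor) && resource_duo_enabled && can_read
end
```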

Running GitLab Duo Chat prompt experiments

Before being merged, all prompt or model changes for GitLab Duo Chat should both:

- Be behind a feature flag and

- Be evaluated locally

The type of local evaluation needed depends on the type of change. GitLab Duo Chat local evaluation using the Prompt Library is an effective way of measuring average correctness of responses to questions about issues and epics.

Follow the Prompt Library guide to evaluate GitLab Duo Chat changes locally. The prompt library documentation is the single source of truth and should be the most up-to-date.

See the video (internal link) that covers the full setup.

How a Chat prompt is constructed

All Chat requests are resolved with the GitLab GraphQL API. For now, prompts for third-party LLMs are hard-coded into the GitLab codebase.

But if you want to make a change to a Chat prompt, it isn't as obvious as finding the string in a single file. Chat prompt construction is hard to follow because the prompt is put together over the course of many steps. Here is the flow of how we construct a Chat prompt:

- An API request is made to the GraphQL AI Mutation; the request contains the user's Chat input. (code)
- The GraphQL mutation calls `Llm::ExecuteMethodService#execute`. (code)
- `Llm::ExecuteMethodService#execute` sees that the `chat` method was sent to the GraphQL API and calls `Llm::ChatService#execute`. (code)
- `Llm::ChatService#execute` calls `schedule_completion_worker`, which is defined in `Llm::BaseService` (the base class for `ChatService`). (code)
- `schedule_completion_worker` calls `Llm::CompletionWorker.perform_for`, which asynchronously enqueues the job. (code)
- `Llm::CompletionWorker#perform` is called when the job runs. It deserializes the user input and other message context and passes them to `Llm::Internal::CompletionService#execute`. (code)
- `Llm::Internal::CompletionService#execute` calls `Gitlab::Llm::CompletionsFactory#completion!`, which pulls the `ai_action` from the original GraphQL request, initializes a new instance of `Gitlab::Llm::Completions::Chat`, and calls `execute` on it. (code)
- `Gitlab::Llm::Completions::Chat#execute` calls `Gitlab::Llm::Chain::Agents::ZeroShot::Executor`. (code)
- `Gitlab::Llm::Chain::Agents::ZeroShot::Executor#execute` calls `execute_streamed_request`, which calls `request`, a method defined in the `AiDependent` concern. (code)
- (*) `AiDependent#request` pulls the base prompt from `provider_prompt_class.prompt`. For Chat, the provider prompt class is `ZeroShot::Prompts::Anthropic`. (code)
- (*) `ZeroShot::Prompts::Anthropic.prompt` pulls a base prompt and formats it the way Anthropic expects for the Text Completions API. (code)
- (*) As part of constructing the prompt for Anthropic, `ZeroShot::Prompts::Anthropic.prompt` calls the `base_prompt` class method, which is defined in `ZeroShot::Prompts::Base`. (code)
- (*) `ZeroShot::Prompts::Base.base_prompt` calls `Utils::Prompt.no_role_text` and passes `prompt_version` to the method call. The `prompt_version` option resolves to `PROMPT_TEMPLATE` from `ZeroShot::Executor`. (code)
- (*) `PROMPT_TEMPLATE` is where the available tools and the definitions for each tool are interpolated into the zero-shot prompt using the `format` method. (code)
- (*) The `PROMPT_TEMPLATE` is interpolated into the `default_system_prompt`, defined (here) in the `ZeroShot::Prompts::Base.base_prompt` method call, and that whole prompt string is sent to `ai_request.request`. (code)
- `ai_request` is defined in `Llm::Completions::Chat` and evaluates to either `AiGateway` or `Anthropic`, depending on the presence of a feature flag. On production, we use `AiGateway`, so this documentation follows that code path. (code)
- `ai_request.request` routes to `Llm::Chain::Requests::AiGateway#request`, which calls `ai_client.stream`. (code)
- `ai_client.stream` routes to `Gitlab::Llm::AiGateway::Client#stream`, which makes an API request to the AI Gateway `/v1/chat/agent` endpoint. (code)
- We've now made our first request to the AI Gateway. If the LLM says that the answer to the first request is a final answer, we parse the answer and return it. (code)
- (*) If the first answer is not final, the "thoughts" and "picked tools" from the first LLM request are parsed and then the relevant tool class is called. (code)
- The tool executor classes also include `Concerns::AiDependent` and use the included `request` method similar to how `ZeroShot::Executor` does (example). The `request` method uses the same `ai_request` instance that was injected into the `context` in `Llm::Completions::Chat`. For Chat, this is `Gitlab::Llm::Chain::Requests::AiGateway`. So, essentially the same request to the AI Gateway is put together, but with a different `prompt` / `PROMPT_TEMPLATE` than for the first request. (Example tool prompt template)
- If the tool answer is not final, the response is added to `agent_scratchpad` and the loop in `ZeroShot::Executor` starts again, adding the additional context to the request. It loops up to 10 times until a final answer is reached.

(*) indicates that this step is part of the actual construction of the prompt
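Two of the interpolation steps marked with (*) can be sketched as follows. First, `PROMPT_TEMPLATE` is filled with tool definitions through `format`. Then the result is embedded in a system prompt. The template text, the `DEFAULT_SYSTEM_PROMPT` wording, `ToolDef` and the placeholder names are invented for illustration and do not match GitLab's actual prompts.

```ruby
# Hypothetical sketch of the two interpolation steps; wording is invented.
ToolDef = Struct.new(:name, :description)

DEFAULT_SYSTEM_PROMPT = "You are a DevSecOps assistant helping GitLab users." # placeholder

PROMPT_TEMPLATE = <<~PROMPT
  Answer the question as accurately as you can.
  You have access to the following tools:
  %<tool_definitions>s
  Use one of these tool names: %<tool_names>s
  Question: %<user_input>s
  %<agent_scratchpad>s
PROMPT

def build_prompt(tools, user_input:, agent_scratchpad: "")
  # Step 1: interpolate tool names and descriptions into the template.
  body = format(
    PROMPT_TEMPLATE,
    tool_definitions: tools.map { |t| "- #{t.name}: #{t.description}" }.join("\n"),
    tool_names: tools.map(&:name).join(", "),
    user_input: user_input,
    agent_scratchpad: agent_scratchpad
  )
  # Step 2: embed the filled template in the system prompt.
  "#{DEFAULT_SYSTEM_PROMPT}\n\n#{body}"
end
```

On later loop iterations, the tool observations would be passed as `agent_scratchpad`, which is how the additional context reaches the next request.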

Interpreting GitLab Duo Chat error codes

GitLab Duo Chat has error codes with specified meanings to assist in debugging. Currently, they are only logged, but in the future, they will be displayed in the UI.

See the GitLab Duo Chat troubleshooting documentation for a list of all GitLab Duo Chat error codes.

When developing for GitLab Duo Chat, please include these error codes when returning an error and document them, especially for user-facing errors.

Error Code Format

The error codes follow the format: <Layer Identifier><Four-digit Series Number>.

For example:

- `M1001`: A network communication error in the monolith layer.
- `G2005`: A data formatting/processing error in the AI gateway layer.
- `A3010`: An authentication or data access permissions error in a third-party API.

Error Code Layer Identifier

| Code | Layer |
|---|---|
| M | Monolith |
| G | AI Gateway |
| A | Third-party API |

Error Series

| Series | Type |
|---|---|
| 1000 | Network communication errors |
| 2000 | Data formatting/processing errors |
| 3000 | Authentication and/or data access permission errors |
| 4000 | Code execution exceptions |
| 5000 | Bad configuration or bad parameters errors |
| 6000 | Semantic or inference errors (the model does not understand or hallucinates) |
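The following minimal Ruby sketch decodes an error code using the two tables above. It assumes the series is the thousands part of the four-digit number, so `G2005` belongs to the 2000 series, which is consistent with the examples. `parse_error_code` and `ErrorCode` are hypothetical names and are not part of the GitLab codebase.

```ruby
# Hypothetical decoder for <Layer Identifier><Four-digit Series Number> codes.
LAYERS = { "M" => "Monolith", "G" => "AI Gateway", "A" => "Third-party API" }.freeze
SERIES = {
  1000 => "Network communication errors",
  2000 => "Data formatting/processing errors",
  3000 => "Authentication and/or data access permission errors",
  4000 => "Code execution exceptions",
  5000 => "Bad configuration or bad parameters errors",
  6000 => "Semantic or inference errors"
}.freeze

ErrorCode = Struct.new(:code, :layer, :series, :type, keyword_init: true)

def parse_error_code(code)
  match = /\A([MGA])(\d{4})\z/.match(code)
  raise ArgumentError, "invalid error code: #{code}" unless match

  # Assumption: the thousands part selects the series (2005 -> 2000).
  series = (match[2].to_i / 1000) * 1000
  type = SERIES.fetch(series) { raise ArgumentError, "unknown series in #{code}" }
  ErrorCode.new(code: code, layer: LAYERS.fetch(match[1]), series: series, type: type)
end
```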